By KIM BELLARD

If you went to business school, or perhaps did graduate work in statistics, you may have heard of survivor bias (AKA, survivorship bias or survival bias). To grossly simplify, we know about the things that we know about, the things that survived long enough for us to learn from. Failures tend to be ignored — if we are even aware of them.

This, of course, makes me think of healthcare. Not so much about the patients who survive versus those who do not, but about the people who come to the healthcare system to be patients versus those who don’t. Healthcare has a “patient bias.”

Survivor bias has a great origin story, even if it may not be entirely true and probably gives too much credit to one person. It goes back to World War II, to mathematician Abraham Wald, who was working in a high-powered classified program called the Statistical Research Group (SRG).

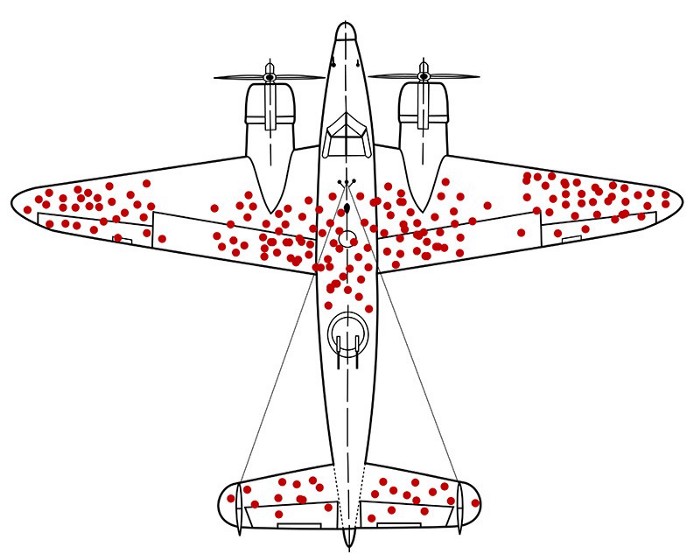

One of the hard questions SRG was asked was how best to armor airplanes. It’s a trade-off: the more armor, the better the protection against anti-aircraft weapons, but the more armor, the slower the plane and the fewer bombs it can carry. They had reams of data about bullet holes in returning airplanes, so they (thought they) knew which parts of the airplanes were the most vulnerable.

Dr. Wald’s great insight was: wait, what about all the planes that aren’t returning? The ones whose data we’re looking at are the ones that survived long enough to make it back. The real question was: where are the “missing holes”? I.e., what was the data from the planes that did not return?

I won’t embarrass myself by trying to explain the math behind it, but essentially what they had to do was figure out how to estimate those missing holes in order to get a more complete picture. The places with the most bullet holes aren’t the areas that need more armor, because, obviously, planes can absorb hits to those parts and still make it back. It turned out that armoring engines was the best bet. As the story goes, the military took Dr. Wald’s advice, saving countless pilots’ lives and helping shorten the war.
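
For readers who would rather see the idea than the math, here is a minimal simulation sketch. It is not Dr. Wald’s actual method, and every number in it is invented: the three sections, the per-hit chance of downing the plane (`LETHALITY`), and the assumption that hits land evenly across sections are all hypothetical. It only shows how holes in the most dangerous section go missing from the returning planes.

```python
import random

# Hypothetical chance that a single hit in each section downs the plane.
# These values are made up for illustration; they are not historical estimates.
LETHALITY = {"engine": 0.6, "fuselage": 0.1, "wings": 0.05}


def simulate(num_planes=10_000, hits_per_plane=3, seed=0):
    """Return (holes on all planes, holes on returning planes) per section."""
    rng = random.Random(seed)
    sections = list(LETHALITY)
    all_holes = dict.fromkeys(sections, 0)
    returned_holes = dict.fromkeys(sections, 0)
    for _ in range(num_planes):
        # Assumption: each hit is equally likely to land in any section.
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        # The plane returns only if it survives every one of its hits.
        survived = all(rng.random() > LETHALITY[s] for s in hits)
        for s in hits:
            all_holes[s] += 1
            if survived:
                returned_holes[s] += 1
    return all_holes, returned_holes


def shares(counts):
    """Convert raw hole counts into each section's share of the total."""
    total = sum(counts.values())
    return {section: n / total for section, n in counts.items()}


if __name__ == "__main__":
    all_holes, returned_holes = simulate()
    print("all planes:      ", shares(all_holes))
    print("returning planes:", shares(returned_holes))
```

Under these made-up numbers, engines take about a third of all hits but show up far less often among the holes counted on returning planes. Someone looking only at the survivors would conclude that engines rarely get hit.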

That, my friends, is genius – not so much the admittedly complicated math as simply recognizing that there were “missing holes” that needed to be accounted for.

Jordan Ellenberg, in his How Not to Be Wrong, posed another example of survivor bias – comparing mutual funds’ performance. You might compare performance over, say, ten years:

> But something’s missing: the funds that aren’t there. Mutual funds don’t live forever. Some flourish, some die. The ones that die are, by and large, the ones that don’t make money. So judging a decade’s worth of mutual funds by the ones that still exist at the end of the ten years is like judging our pilots’ evasive maneuvers by counting the bullet holes in the planes that come back.
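
The mutual fund version is easy to sketch in code. This is a toy model under assumptions of my own, not anything from Ellenberg’s book: every fund draws its yearly return from the same distribution (so no fund is more skilled than another), and a fund is closed the first time its value falls below a hypothetical `close_below` threshold.

```python
import random
from statistics import mean


def simulate_funds(num_funds=2_000, years=10, close_below=0.8, seed=1):
    """Return (final values of all funds, final values of surviving funds)."""
    rng = random.Random(seed)
    all_final, survivor_final = [], []
    for _ in range(num_funds):
        value, alive = 1.0, True
        for _ in range(years):
            # Assumed yearly return: mean 5%, standard deviation 15%.
            value *= 1 + rng.gauss(0.05, 0.15)
            if value < close_below:
                alive = False  # the fund closes, frozen at its last value
                break
        all_final.append(value)
        if alive:
            survivor_final.append(value)
    return all_final, survivor_final


if __name__ == "__main__":
    all_final, survivor_final = simulate_funds()
    print("funds started:  ", len(all_final))
    print("funds surviving:", len(survivor_final))
    print("average value, all funds:     ", round(mean(all_final), 3))
    print("average value, survivors only:", round(mean(survivor_final), 3))
```

Because the closed funds are exactly the ones that lost money, the survivors-only average comes out higher than the average across every fund that started the decade, even though all of them were equally "skilled."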

Healthcare has lots of data. Every time you interact with the healthcare system you’re generating data for it. The system has more data than it knows what to do with, even in a supposed era of Big Data and sophisticated analytics. It has so much data that its biggest problem is usually said to be the lack of sharing that data, due to interoperability barriers/reluctance.

I think the bigger problem is the missing data.

Take, for example, the problem with clinical trials, the gold standard in medical research. We’ve become aware over the last few years that results from clinical trials may be valid if you are a white man, but otherwise, not so much. A 2018 FDA drug trial analysis found that white people made up 67% of the population but 83% of research participants; women are 51% of the population but 38% of trial participants. There’s important data that clinical trials are not generating.

Or think about side effects of drugs or medical devices. It’s bad enough that the warning labels list so many possible ones, without any real ranking of their likelihood, but what’s worse is that those are only the ones reported by clinical trial participants or others who took the initiative to contact the FDA or the manufacturer. Where are the “missing reports,” from people who didn’t attribute their symptoms to the drug or device, who didn’t know how to make a report or didn’t take the initiative to, or who were simply unable to?

Physicians often try to explain to prospective patients how they might fare post-treatment (e.g., surgery or chemo), but do they really know? They know what patients report during scheduled follow-up visits, or when patients were worried enough to call, but otherwise, they don’t really know. As my former colleague Jordan Shlain, MD, preaches: “no news isn’t good news; it’s just no news.”

The healthcare system is, at best, haphazard about tracking what happens to people after they engage with it.

Most important, though, is data on what happens outside the healthcare system. The healthcare system tracks data on people who are patients, not on people when they aren’t. We’re not looking at people when they don’t need health care; we’re not gathering data on what it means to be healthy. Those are the “missing patients.”

Our healthcare system’s baseline should be people while they are healthy – understanding what that is and how they achieve it. Then it needs to understand how that changes when they’re sick or injured, and especially how their interactions with the healthcare system improve or impede their return to that health.

We’re a long way from that. We’ve got too many “missing holes,” and, like the WWII military experts, we don’t even realize we’re missing them. We need to fill in those holes. We need to fix the patient bias.

Healthcare has a lot of people who, figuratively, make airplanes and many others who want to sell us more armor. But we’re the pilots, and those are our lives on the line. We need an Abraham Wald to look at all of the data, to understand all of our health and all of the various things that impact it.

It’s 2022. We have the ability to track health in people’s everyday lives. We have the ability to analyze the data that comes from that tracking. It’s just not clear who in the healthcare system has the financial interest to collect and analyze all that data. Therein lies the problem.

We’re the “missing holes” in healthcare.


Really interesting post. I guess in a clinical trials context, the trialists manage survivor bias by analysing outcomes on an intention-to-treat basis, thereby avoiding the issues of loss to follow-up. But unpublished trials are a big issue, and so, systematically, is the non-reporting of off-label and individual patient uses.