By KIM BELLARD

If you went to business school, or perhaps did graduate work in statistics, you may have heard of survivor bias (AKA, survivorship bias or survival bias). To grossly simplify, we know about the things that we know about, the things that survived long enough for us to learn from. Failures tend to be ignored — if we are even aware of them.

This, of course, makes me think of healthcare. Not so much about the patients who survive versus those who do not, but about the people who come to the healthcare system to become patients versus those who don’t. Healthcare has a “patient bias.”

Survivor bias has a great origin story, even if it may not be entirely true and probably gives too much credit to one person. It goes back to World War II, to mathematician Abraham Wald, who was working in a high-powered classified program called the Statistical Research Group (SRG).

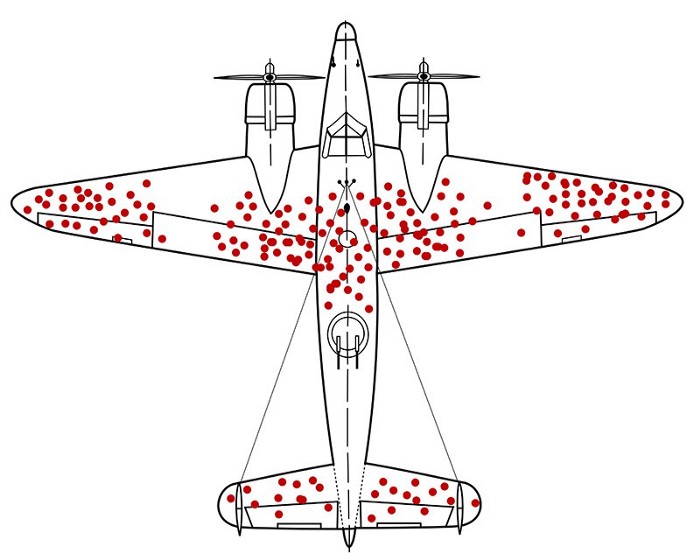

One of the hard questions SRG was asked was how best to armor airplanes. It’s a trade-off: the more armor, the better the protection against anti-aircraft weapons, but the more armor, the slower the plane and the fewer bombs it can carry. They had reams of data about bullet holes in returning airplanes, so they (thought they) knew which parts of the airplanes were the most vulnerable.

Dr. Wald’s great insight was: wait, what about all the planes that aren’t returning? The ones whose data we’re looking at are the ones that survived long enough to make it back. The real question was: where are the “missing holes”? I.e., what would the data from the planes that did not return have shown?
