The phrase “healthcare data” either strikes fear and loathing, or provides understanding and resolve in the minds of administration, clinicians, and nurses everywhere. Which emotion it brings out depends on how the data will be used. Data employed as a weapon for purposes of accountability generates fear. Data used as a teaching instrument for learning inspires trust and confidence.

Not all data for accountability is bad. Data used for prescriptive analytics within a security framework, for example, is necessary to reduce or eliminate fraud and abuse. And data for improvement isn’t without its own faults, such as the tendency to perfect it to the point of inefficiency. But the general culture of collecting data to hold people accountable is counterproductive, while collecting data for learning leads to continuous improvement.

This isn’t a matter of eliminating what some may consider to be bad metrics. It’s a matter of shifting the focus away from using metrics for accountability and toward using them for learning so your hospital can start to collect data for improving healthcare.

Data for Accountability

Data for accountability is a regulatory requirement. It’s time-consuming and can be less than perfect. But it has made a difference in some areas: readmission and cancer rates are decreasing, and patient satisfaction scores are increasing.

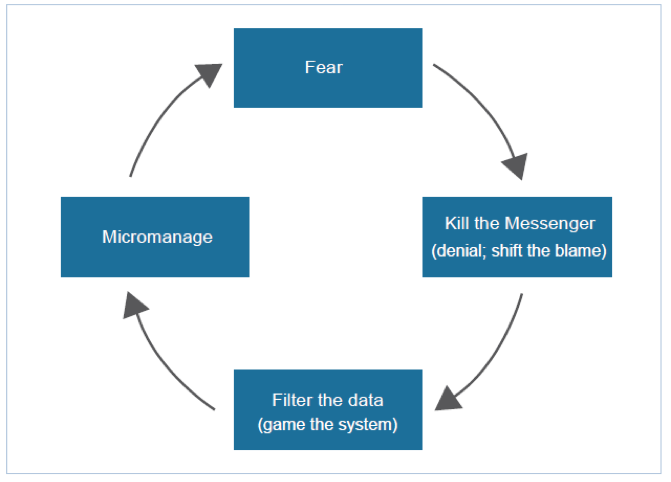

But an accountability model of results improvement places the focus on people rather than processes. This watchdog approach tends to make many people defensive and resistant to learning, and it impedes continuous improvement. It initiates the cycle of fear, first described by Scherkenbach (Figure 1), in which fear of repercussion leads to denial and blame shifting, followed by other negative behaviors that perpetuate a culture of fear. Yet most errors that jumpstart this cycle are the result of flawed processes rather than flawed individuals.

Figure 1: The Cycle of Fear (Scherkenbach, 1991).

Data for accountability can be not only punitive but also detrimental to improving outcomes. For example, a well-known CMS core measure is the 30-day, all-cause, risk-standardized readmission rate following hospitalization for heart failure. In the process of complying with this metric, it’s possible that patients who should be back in the hospital are not being readmitted. Likewise, in the process of hitting a metric for administering beta-blocker therapy, it’s possible to obscure notes to ensure a favorable entry in a patient’s record.

Data collection for accountability is time-consuming. According to a survey published in Health Affairs, physician practices in four specialty areas spend more than 785 hours per physician per year reporting on quality measures. Staff members spend 12.5 hours and physicians spend 2.6 hours per physician per week on this work. And when anticipating reward or punishment based on a metric, healthcare systems dwell excessively on data accuracy. A lot of effort goes into determining which patients should or should not be included in any given metric.
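Assuming the weekly figures are per physician and apply across a 52-week year, they add up to the annual total:

$$
(12.5 + 2.6)\,\frac{\text{hours}}{\text{week}} \times 52\,\frac{\text{weeks}}{\text{year}} = 15.1 \times 52 = 785.2\,\frac{\text{hours}}{\text{year}}
$$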

Deming and Over-Emphasis of Numbers

Deming said the right amount of effort focused on a process improves it. The right amount of focus means recognizing the many different factors that play into a number and learning what you can, but not over-emphasizing the importance of that number.

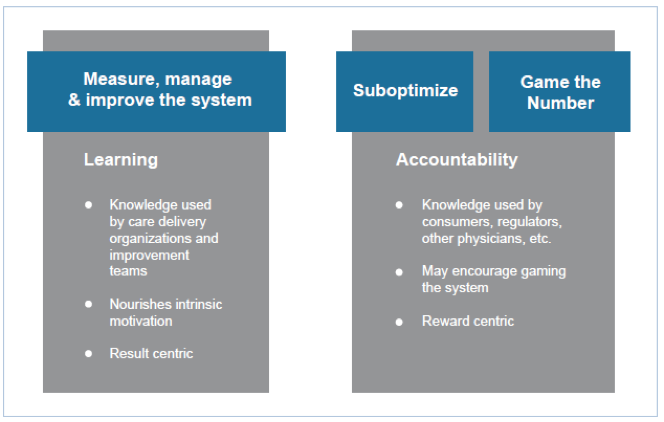

Too much focus sacrifices quality in other areas. For example, say there are five key factors that impact diabetes care, but only one is tied to an accountability report or a bonus metric. The other four factors may suffer because clinicians are focusing on the one key metric rather than on all five factors that impact the patient’s health. This is sub-optimization, a negative attribute of collecting data for accountability.

Because there are so many regulatory metrics and so little time, many organizations sub-optimize on them, even though other metrics have a greater impact on the cost of care and on patient health. Organizations can’t focus on improving the more relevant metrics because they are busy sub-optimizing on the regulatory metrics that hold them accountable for compliance.

Extreme focus results in gaming the system by manipulating numbers. Because too much emphasis is placed on the metric rather than on the improvement, there are a variety of disingenuous ways to game the system: forging documents, stretching the truth, burying notes, refusing to see patients who could negatively impact a metric. Gaming the system can go as far as cheating. Processes aren’t improved at all; only the reporting is.

If organizations put as much effort into improving the process as they do into documenting their results, they wouldn’t need the regulatory metric in the first place. To avoid penalties down the road, they spend thousands of hours optimizing for a definition of accountability, when that time would be much better spent improving processes.

Figure 2: Ways to get a better number (“Healthcare: A Better Way,” 2014).

This is like the student who studies just to pass the test, but never gains any knowledge. He is memorizing answers to specific problems without realizing that the whole reason for the test is to understand physics or philosophy, not just how to get an A. When healthcare workers go back and alter data, it doesn’t improve patient care.

Data for Improving Healthcare

Data for improving healthcare, or data for learning, requires an internal strategy that addresses specific areas of clinical process improvement, quality, and cost control. While accountability data is driven by external reporting requirements, clinical or operational data for outcomes improvement is driven by a strategy to better understand a process and the root causes of its failures. Analyzing this data deepens understanding of patient populations, as well as of clinical and cost outcomes, by revealing the process variation that leads to different results.

Data that’s tracked for establishing a baseline, and then for comparing to the baseline to determine whether or not an improvement occurred, is data for learning.
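One common way to compare new data against a baseline comes from run-chart practice in improvement science, not from this article. The idea is to look for a “shift”: a string of consecutive post-change points on the favorable side of the baseline median. The minimal sketch below assumes a lower-is-better monthly measure, such as a readmission rate, and a run length of six. The function name and the numbers are hypothetical.

```python
from statistics import median


def detect_shift(baseline, follow_up, run_length=6):
    """Return True if follow_up has run_length or more consecutive points
    below the baseline median (assumes lower values are better)."""
    center = median(baseline)
    run = 0
    for value in follow_up:
        if value < center:
            run += 1
            if run >= run_length:
                return True
        elif value > center:
            run = 0  # a point on the unfavorable side breaks the run
        # a point exactly on the median neither extends nor breaks the run
    return False


# Hypothetical monthly readmission rates (percent) before and after a change.
baseline_months = [21.0, 19.5, 22.5, 20.0, 20.5, 21.5]
follow_up_months = [19.8, 18.9, 19.2, 18.5, 19.0, 18.7]

print(median(baseline_months))                          # 20.75
print(detect_shift(baseline_months, follow_up_months))  # True
```

The baseline is fixed before the change is made, so the follow-up data are judged against it rather than against a target or a peer ranking.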

If the focus is on learning rather than reporting, the data can be far more revealing. Let’s look again at a cohort of heart failure patients. If we measure whether patients are taking a medication simply to track, learn about, and increase medication compliance for the patients’ benefit, then the data’s purpose changes. It becomes about learning and improvement.

Data for Learning Has its Drawbacks

The people and processes involved with collecting data for learning can still be subject to some of the pitfalls associated with collecting data for accountability. A lot of time is spent reporting on quality metrics, often at the expense of improving things. Too often, the focus is on simply reporting or achieving a ranking. It’s up to each healthcare organization to use data and metrics effectively to learn about its own processes.

Time is also a factor with data for learning. Too often, perfect data becomes the goal of learning and improvement work, when all that’s needed is data good enough to reveal whether a new process is better than the old one. Clinicians sometimes aim for a level of data accuracy that’s required only of audited financial reports or a double-blind study, when all they need to know is whether a new process helps patients more than the old one. Should a new discharge process be adopted, or is the existing process better? A quick solution might be a quasi-experimental A/B comparison: try each process in a different unit for a month, get rough numbers, and then figure out which works better. A service line could start using those kinds of numbers even though they aren’t 100 percent accurate. Eighty percent accuracy can still be very useful, and it is much more cost effective.
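The “rough numbers” from a two-unit comparison can be checked with a quick significance test. The minimal sketch below uses a standard two-proportion z-test. That test is one common choice, not a method the article specifies. It assumes the outcome is a yes/no event such as a 30-day readmission. The counts and the function name are hypothetical.

```python
import math


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Compare the event rates of two groups.

    Returns (rate_a, rate_b, z, p_value). The p-value is two-sided and uses
    the normal approximation with a pooled standard error.
    """
    rate_a = events_a / n_a
    rate_b = events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("no variation: every patient had the same outcome")
    z = (rate_a - rate_b) / standard_error
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical month of data: unit A keeps the old discharge process,
# unit B tries the new one; the event counted is a 30-day readmission.
rate_a, rate_b, z, p = two_proportion_z_test(30, 150, 18, 150)
print(f"old: {rate_a:.1%}  new: {rate_b:.1%}  z = {z:.2f}  p = {p:.3f}")
```

With these made-up numbers, the new process looks better, but the p-value lands a little above 0.05. In that situation a team might simply run another month rather than hold out for perfect data.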

Developing New Measures for Learning

Eventually, the industry will adopt new measures that matter most to patients, such as how quickly someone can return to work following hip replacement surgery. The International Consortium for Health Outcomes Measurement (ICHOM) focuses on outcomes measures—metrics for learning—and has developed Standard Sets for 13 conditions. They plan to have additional sets that will cover 50 percent of all diseases by 2017. Health Catalyst’s care management applications will be able to capture this type of data, as well as patient-reported outcomes, both subjective and objective.

Accountability vs. Learning: Another Perspective

In January 2003, Brent James, MD, Executive Director of the Intermountain Institute for Healthcare Delivery Research; Don Berwick, MD, the former CMS Administrator; and Molly Coye, MD, the former Chief Innovation Officer for UCLA Health, wrote in the journal Medical Care about the connections between quality measurement and improvement. The article describes two pathways for “linking the processes of measurement to the processes of improvement.”

- The Selection Pathway (accountability)

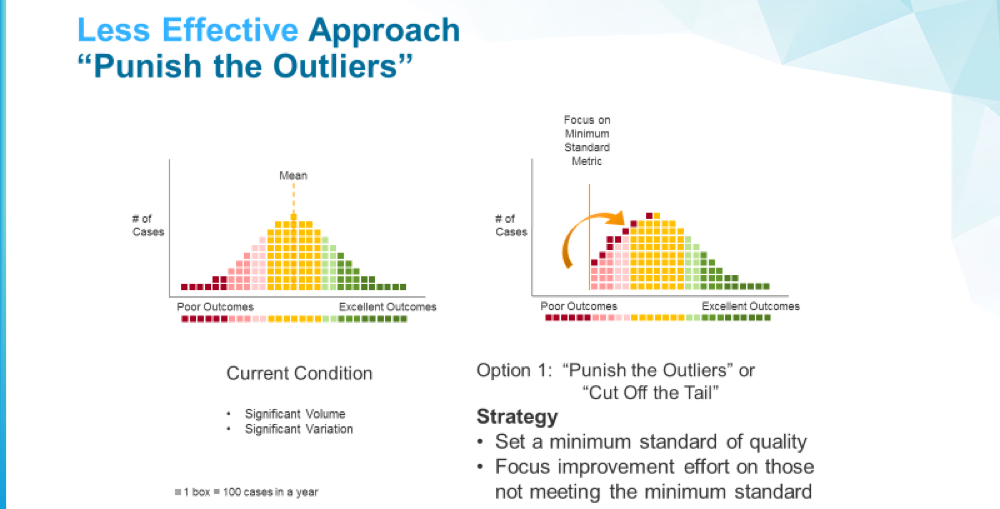

The selection pathway is based on ranking and reputation. Patients select clinicians, employers select health plans, rating agencies accredit hospitals, and doctors refer to other doctors, all based on measured performance. The purpose behind this pathway is not only to hold people accountable but also to judge whether a physician or hospital is good or bad. Supposedly, when people self-select away from a bad physician to a good physician, or from a bad hospital to a good hospital, that motivates a change in behavior. This pathway doesn’t lead underperformers to improve, but the outcomes of care appear to improve because of this self-selection process.

- The Change Pathway (learning)

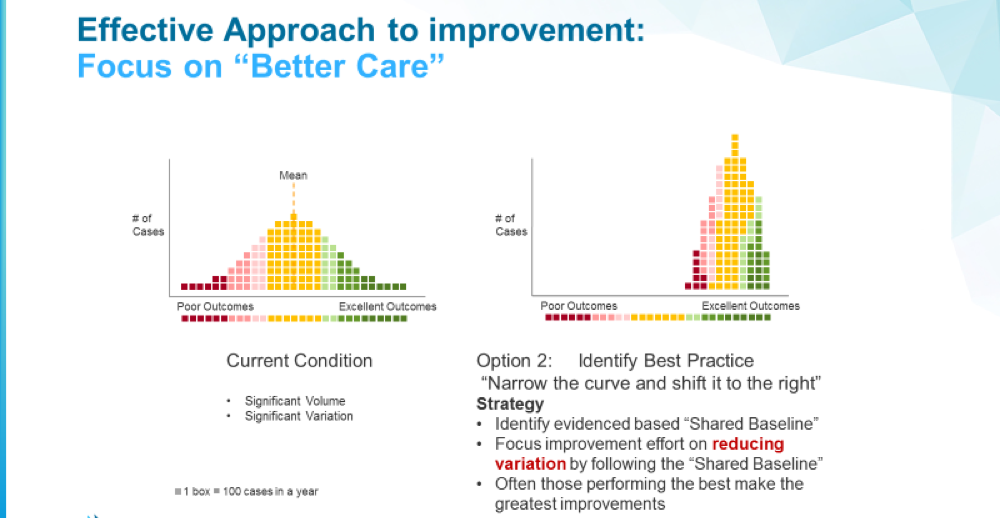

The change pathway seeks to understand processes in order to improve them. People and organizations are not labeled as bad or good; processes are labeled as less or more effective. This way, the data’s purpose becomes helping an organization learn about breakdowns and failures in the process, not judging whether people or organizations are performing poorly.

The article makes this analogy: “A grocery shopper can select the best bananas without having the slightest idea about how bananas are grown or how to grow better bananas. Her job is to choose (Pathway I). Banana growers have quite a different job. If they want better bananas, they have to understand the processes of growing, harvesting, shipping, and so on, and they have to have a way to improve those processes. This is Pathway II.”

Figure 3: In this illustration of accountability (the Selection Pathway), outliers below a minimum standard are identified and reduced, but no real improvement occurs.

Figure 4: In the more effective approach to improvement, the focus is on better care processes to shift the overall curve.
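The contrast between Figures 3 and 4 can be mimicked with a toy simulation. The minimal sketch below assumes normally distributed performance scores with a mean of 70 and a standard deviation of 10. It also assumes an arbitrary minimum standard of 55 and an across-the-board process improvement of 5 points. None of these numbers come from the article, and the function names are hypothetical.

```python
import random
from statistics import mean


def selection_pathway(scores, minimum_standard):
    """Pathway I: remove performers below the minimum standard; everyone
    else is unchanged."""
    return [s for s in scores if s >= minimum_standard]


def change_pathway(scores, improvement):
    """Pathway II: improve the care process for everyone, which shifts the
    whole distribution by the same amount."""
    return [s + improvement for s in scores]


random.seed(42)  # fixed seed so the example is reproducible
baseline = [random.gauss(70, 10) for _ in range(1000)]  # hypothetical scores

selected = selection_pathway(baseline, minimum_standard=55)
shifted = change_pathway(baseline, improvement=5)

for label, scores in [("baseline", baseline), ("selection", selected), ("change", shifted)]:
    print(f"{label:>9}: n={len(scores):4d}  mean={mean(scores):5.1f}  best={max(scores):5.1f}")
```

In the selection run, the mean rises only because the low tail is removed, and the best score stays the same. In the change run, every score moves up, including the best.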

The Synergy of Data for Accountability and Improvement

Regardless of the intent behind data collection, it must be used responsibly. Extravagant executive dashboards can be an overuse of data, especially if no improvement comes from the effort. The diverted effort has nothing to do with outcomes improvement and distracts from other metrics that deserve attention. A bottom-up approach is preferred, where improvement efforts are designed with actual processes behind them, rather than a top-down approach that creates fire drills and produces wasted work.

As James and others wrote: “‘Pathway I’ (Selection) can be a powerful tool for getting the best out of the current distribution of performance. ‘Pathway II’—improvement through changes in care—can shift the underlying distribution of performance itself.”

We are getting smarter about this as an industry. It’s fair to say that both regulatory and clinical metrics can play on the same team. What needs to change is the focus on accountability and judgment so everyone can achieve the desired goal of learning and improvement.

Tom Burton is co-founder and executive vice president of Health Catalyst.

This discussion is eye opening for me. I’m currently in school to obtain my RHIT certification. I suppose I’ve gone in with blinders on thinking that the digitization of information could only serve to improve patient care and also facilitate patient participation in their own care. I had not considered the use of this data to reflect more on productivity versus outcomes for the patient.

Steve2, thanks. EHR systems were built for inputting clinical records and indeed have struggled to deliver reports, analytics or insights. We take a data operating system approach. Our clients have connected over a hundred and forty source systems, many of which are EMRs, but the data operating system is designed for insights and inquiry, not for billing and documentation. This makes it much easier and faster to identify opportunities for improvement, find the root causes of challenges, and design interventions that significantly improve clinical, cost, and patient experience outcomes. Thanks for your comment.

techydoc, thanks for the comment. I invite you to take another read of the article and search for ‘process.’ You will see that we view the problem very similarly. Instead of focusing accountability on people, we think a focus on process is the right approach to learn and to improve an entire system. The aim is to fix broken processes, not broken people. Thanks for the comment.

rmcnutt, in our work we have seen many data quality and data collection problems. You can find some of our thoughts on data quality and governance that have helped hospitals and clinics to be successful with these real issues which you have identified. https://www.healthcatalyst.com/knowledge-center/search-results?type=category&target=data-quality-management-governance&kc_post_type=webinar

Not sure what various EMR ownership structures are. I know that having every team member at Health Catalyst be an owner, as well as having many of our customers own a portion of Health Catalyst, has helped us feel more responsible for outcomes improvement. Thanks for your comment.

John, I completely agree, things have got to change for healthcare to get better. Some EMR vendors, while publicly supporting interoperability, are actively blocking information sharing and tight integration to try to prevent interoperability between vended products perhaps to buy time to build those products themselves. This is hurting patients. We have a lot of hope that the 21st Century Cures Act will open up information sharing between technology vendors for the good of patients. Our legal counsel wrote a bit about it if interested: https://www.healthcatalyst.com/The-21st-Century-Cures-Act-Is-Great-News-for-Healthcare

Ross, thanks for the compliment. You are right this article is just scratching the surface. The secret sauce is how to get people to change, to use these metrics for learning and to establish consistent, dedicated teams around areas of improvement focus. We have developed a tool to illustrate where hospitals and clinics have large processes with significant variation and that analysis allows them to see the biggest opportunities to reduce variation. We have an annual event in the fall where like minded care delivery organizations come together and discuss best practices around their ‘secret sauce’ for massively improving outcomes. Come join us. Hasummit.com. Thanks again for your comment.

All excellent points, Greg. We are using natural language processing (NLP), predictive models and machine learning with hospitals and clinics to promote more informed clinical and operational decision making. I like to think of this journey in three steps. First is getting a data operating system infrastructure in place, which accelerates data integration. Second is performing opportunity analysis to prioritize which improvement initiatives will give the biggest bang for the buck. Third is transforming both payment structures and care delivery to promote better cost and clinical outcomes. Join us for one of our free educational webinars next week on this very topic if interested. You can find info at https://pages.healthcatalyst.com/2017-02-08HealthcareaiWebinarWebsite.html.

Dr. Gropper, I appreciate your critical questions and comments. I agree with the challenges you have outlined, and as we have worked with 50 healthcare systems across the country, we have seen many of these challenges manifest themselves. I think a large part of this comes from thinking technology alone can solve problems. There is a cultural mindset shift that has to happen, and many times an organization has built its technology strategy around its EMR. EMRs, at least almost all of those on the market today, were originally designed as billing and documentation systems, not systems to accelerate outcomes improvement. The approach that we have seen work well with many health systems across the country is for the analytics or data operating system to become the core technology clinicians leverage to improve care. This includes many of the things you mentioned, such as integrating significant data sources that are outside the EMR realm. For example, we have integrated claims data, patient-reported outcome data, socio-economic data, wearable device data, patient experience data, etc. into our data operating system, giving clinicians a much more comprehensive and panoramic view of what’s happening. This also allows us to predict future events and intervene early to avoid bad outcomes.

Another key strategy is leveraging the right modality for delivering information. We need to get away from static reports and spreadsheets. We have found that smartphones and mobile tablets are among the most effective delivery mechanisms. Some of our newer products delivered this way have seen great engagement and interaction from both patients and clinicians. Thanks for the comments.

Yes, metrics have gotten a bad rap because of some hospital leaders who use them to ‘rank and spank’ underperformers, which is demoralizing. Wise leaders will focus instead on using metrics to identify broken processes and learn how to fix them. Thanks for the comment.

Data is one of the most important elements in making a healthcare campaign successful. This information about using data to improve healthcare is much appreciated. In today’s world of digital technology, the healthcare industry is growing and becoming digital. http://www.gatewaytechnolabs.com/healthcare

This is an interesting exercise in looking theoretically at metrics and their purpose. At least improving processes is something most physicians can get behind. As I have said before, metrics are not all bad. The way things should be, metrics should provide useful information. This type of post is helpful to get us there.

The enterprise perspective of this post is too narrow to be useful. Where’s the patient? Are we learning (or measuring the outcome) at the level of an enterprise, a team within the enterprise, the community, or a patient? Where is the data for risk adjustment and social determinants of health factored in? How does the organization treat transparency vs. accountability?

In most cases, the result vs. reward perspective applies to the administrations as purchasers of analytics more than to the clinical teams. The result is a system that’s focused on the unit of commerce and treats both patients and clinicians as inputs to a mostly-secret and competitive process. Learning is very narrowly defined.

Epic contributes to this problem by making it difficult, if not impossible, for the clinical team to adjust and improve its own process. Independent decision support at the point of care is unheard-of. Creating an accurate active medications list or coordinating care in a patient-centered sense is almost impossible. The tools are not there and nobody is responsible. Responsibility is shifted to patients through huge out-of-pocket costs even as the tools for transparency are elusive and the networks are narrowed to further disempower the patient.

A large integrated delivery network is a combination of some teams that are centers of excellence under an administrative infrastructure that works overtime to avoid transparency or substitutability for the weak or incompetent components of the enterprise. Data and information technology is a strategic asset and patients (as well as clinicians) are more likely to suffer higher costs and frustration than we like to admit.

Certainly a topic we need to keep discussing, with data for patient risk stratification and care management being key use cases.

CMS, for example, uses HCCs (Hierarchical Condition Categories) to tag patients with medical conditions, usually long-term conditions that impact the patient’s need for care. These codes are driven by the claims submitted to CMS. There are models to detect cases where a patient is missing an HCC code. For example, where a patient has HbA1c results or insulin orders but no diabetes HCC code, the model suggests that this code may be missing, a finding that would alter the patient’s care plan and also reimbursement levels. Conversely, if the patient has an HCC for heart failure but no evidence in the data (no claims, no medications, no mention in notes), the model would suggest reviewing the case to see if there is a coding mistake or incomplete documentation. To build the HCC model, more than 35 million longitudinal patient charts were assembled.
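The model described above was built from more than 35 million charts. The sketch below is far simpler: two hand-written rules that only illustrate the checks the paragraph describes. It is not the CMS-HCC model or the commenter’s model. The field names and the medication list are hypothetical. The DIABETES and HEART_FAILURE labels are placeholders, not real HCC categories.

```python
from dataclasses import dataclass, field

# Hypothetical, illustrative medication list; a real model would use a
# clinically curated value set.
HEART_FAILURE_MEDICATIONS = {"furosemide", "sacubitril/valsartan"}


@dataclass
class PatientRecord:
    patient_id: str
    hcc_codes: set = field(default_factory=set)          # e.g. {"DIABETES"}
    lab_tests: set = field(default_factory=set)          # e.g. {"HbA1c"}
    medication_orders: set = field(default_factory=set)  # e.g. {"insulin"}
    claim_diagnoses: set = field(default_factory=set)    # e.g. {"heart failure"}
    note_mentions: set = field(default_factory=set)      # terms found in notes


def flag_hcc_gaps(record):
    """Return review flags for one patient record using two simple rules."""
    flags = []

    # Rule 1: evidence of diabetes but no diabetes HCC, so a code may be missing.
    diabetes_evidence = "HbA1c" in record.lab_tests or "insulin" in record.medication_orders
    if diabetes_evidence and "DIABETES" not in record.hcc_codes:
        flags.append("possible missing diabetes HCC")

    # Rule 2: heart failure HCC with no supporting evidence anywhere, so review the coding.
    heart_failure_evidence = (
        "heart failure" in record.claim_diagnoses
        or "heart failure" in record.note_mentions
        or bool(record.medication_orders & HEART_FAILURE_MEDICATIONS)
    )
    if "HEART_FAILURE" in record.hcc_codes and not heart_failure_evidence:
        flags.append("heart failure HCC lacks supporting evidence")

    return flags


print(flag_hcc_gaps(PatientRecord("p1", lab_tests={"HbA1c"})))
print(flag_hcc_gaps(PatientRecord("p2", hcc_codes={"HEART_FAILURE"})))
```

Both rules only raise flags for human review. The decision to add or remove a code stays with the care team and coders.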

And following data on a consistent basis can help users understand whether it has any predictive value. I’d place that into a few categories:

▪ Sentiment analysis: Use the natural language of the known data to build a model with linguistic processing, and then gauge the sentiment associated with the data when people use that language.

▪ Plan for a predictive window: In prescriptive analytics, the notion of a predictive window describes a window of time within which making an error in prediction is acceptable. The most important criterion for actionability is that the reaction time must be shorter than the predictive window.

▪ Use predictive analytics to meet KPIs: When an insight is closely tied to key business goals and strategic initiatives, it’s more likely to drive action. Strategically aligned insights are easier to interpret and convert into tactical responses because they often relate directly to business levers that can be controlled and influenced.
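The actionability criterion in the predictive-window bullet reduces to a single comparison. The minimal sketch below assumes each prediction carries a predictive window and an estimated reaction time. The class, the field names, and the example use cases are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class Prediction:
    name: str
    predictive_window: timedelta  # how far ahead the event is predicted
    reaction_time: timedelta      # how long the team needs to intervene


def is_actionable(prediction):
    """Actionable only if the team can react before the window closes."""
    return prediction.reaction_time < prediction.predictive_window


# Hypothetical use cases.
predictions = [
    Prediction("readmission risk at discharge", timedelta(days=30), timedelta(days=2)),
    Prediction("overnight staffing shortfall", timedelta(hours=4), timedelta(hours=6)),
]
for p in predictions:
    print(p.name, "->", "actionable" if is_actionable(p) else "not actionable")
```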

I think this post is a real contribution. My concern is that there’s not enough on how to move/select the metrics AND THE FEEDBACK PROCESSES such that one gets the desired improvements. The graphs showing lopping off the left (bad) vs moving the left to the middle are compelling. But the process is the secret sauce in the holy grail (intentional mixed metaphor alert) that we all want. I repeat, a real contribution.

EPIC is privately held, notoriously so. The issue there is cultural. But allegedly things are changing, which is a good thing, if the rumors are true.

As for EPIC, I have always wondered what percentage of the corporation’s authorized stock is owned by its top three tiers of governance. For a top-three EHR systems provider, it sure has an odd ‘temperament.’

Like your post; thank you for pushing ideas. But the data we collect is biased; it is collected for billing or other nefarious reasons, and there are no standards for clarifying the contextual reasons for the information in the first place. We are like the gleaners of old. We pick up pieces of data collected for reasons distant from our questions. The context of data, the source, the sampling methods, the measures of center and variation, the decisions about outlier data and what they mean, and changing patterns over time should be the basics of all data collection. Right now we have piles of manure and we think that we can parse the big piles into better piles, but both still stink. There are better ways to approach care, and better ways to consider how to use data and how much is needed. I fear that collecting data or asking questions without explicit scientific principles will lead us nowhere.

I completely agree with Mr. Burton’s premise that we are collecting and using data for the wrong purposes. Capturing data for accountability is not new. The health care system has been doing that for decades, including the evaluation and management documentation requirements to justify payment. These documentation requirements have led to poor usability of health IT and to inefficiencies, both of which rob resources from patient care. The question is whether we are destined to repeat our past mistakes with the new accountability methodologies.

I do have an issue with his desired purpose of “learning,” which is used throughout the article. As he constructs his argument I believe he ties learning to individuals, instead of learning at the level of work systems. In my opinion our biggest issues are not gaps in knowledge (at least not ones we can systematically and permanently close) but rather a gap in performance of a work system. [perhaps my issue stems from my mental model of the term learning and not Mr. Burton’s intended meaning of the term]

Rather than “learning” (at the individual level), the goal should be improving the reliability and performance of the work system. For anyone not familiar with work systems, I would steer you toward the Systems Engineering Initiative for Patient Safety (SEIPS) model. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3835697/

I am pleased to see The Health Care Blog giving voice to this important topic and dialog, and Mr. Burton furthering the discussion and understanding.

We have EPIC. It is just hard to get good reports from them. I finally think we are getting somewhere but I keep thinking we must be reinventing the wheel. I believe that most practices would want to look at pretty much the same stuff.